Neural Nets and Optimization Algorithms (Fully Connected and Convolutional Neural Nets)

It was a great learning experience implementing:

- Fully connected Networks

- Convolutional Neural Networks

- Batch Normalization (was recently proposed)

- Dropout

- A new optimization algorithm I came up with

- A modification to Batch Normalization that uses cumulative moving averages of the mean and variance rather than exponential moving averages

all on the CIFAR-10 dataset.

This dataset has 10 classes.

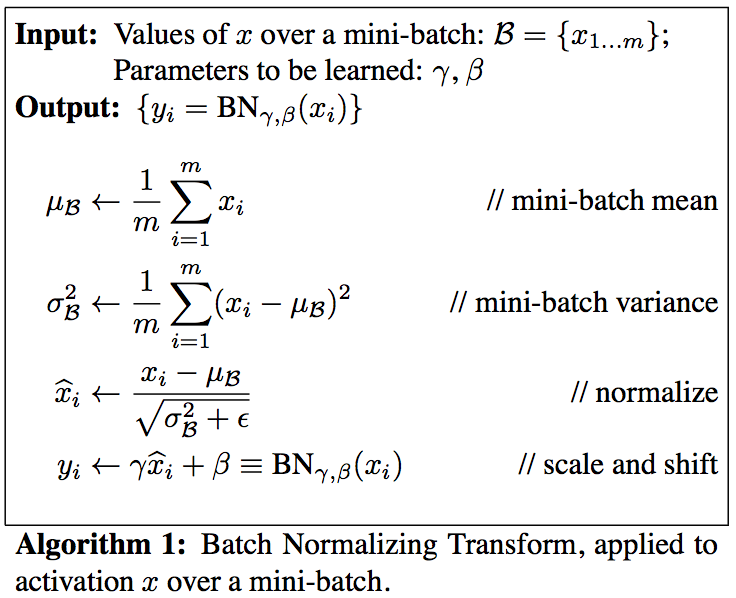

Batch Normalization

As I will show below, Batch Normalization is a significant improvement. So it deserves a small section of its own.

The paper (linked above) proposes normalizing the activations of hidden units to zero mean and unit variance by doing the following in forward propagation:
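For a mini-batch $x_1, \dots, x_m$ of values of one activation, the transform as given in the Batch Normalization paper can be written as follows (restated here from the paper; $\epsilon$ is a small constant for numerical stability, and $\gamma$, $\beta$ are learned per-activation scale and shift parameters):

$$
\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad
\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} (x_i - \mu_B)^2, \qquad
\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad
y_i = \gamma \hat{x}_i + \beta
$$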

This is simple. However, it is back propagation through this function that does the trick! Basically, the paper proposes the following main things:

- Center and normalize using mini-batch samples.

- Since mean and variance are based on current mini-batch samples, back propagation must consider them as variables.

- A learnable scale $\gamma$ and shift $\beta$ are proposed, so that the identity transform is covered in the solution space. (I find it very interesting that some major breakthrough papers, like ResNet, are also designed around covering the identity function in the solution space.)

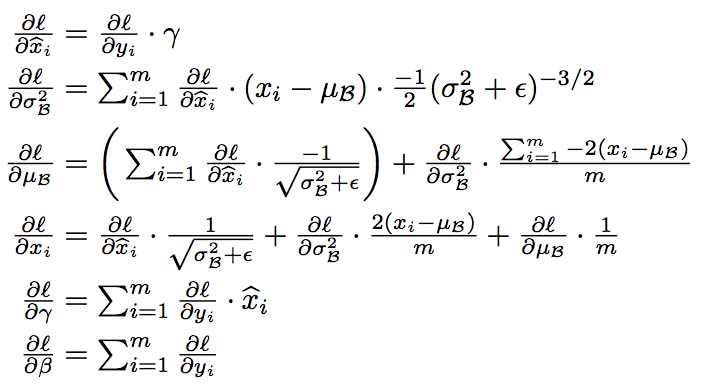

So, backward propagation becomes:
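A minimal NumPy sketch of the forward pass and the matching backward pass, assuming a 2-D input of shape `(N, D)` normalized per feature. The function names, the `cache` layout and `eps=1e-5` are illustrative choices, not necessarily those of the project's source code.

```python
import numpy as np

def batchnorm_forward(x, gamma, beta, eps=1e-5):
    """Training-time forward pass over a mini-batch x of shape (N, D)."""
    mu = x.mean(axis=0)                    # per-feature mini-batch mean
    var = x.var(axis=0)                    # per-feature (biased) mini-batch variance
    x_hat = (x - mu) / np.sqrt(var + eps)  # zero mean, unit variance
    out = gamma * x_hat + beta             # learnable scale and shift
    cache = (x_hat, gamma, var, eps)
    return out, cache

def batchnorm_backward(dout, cache):
    """Gradients w.r.t. x, gamma and beta, given upstream gradient dout."""
    x_hat, gamma, var, eps = cache
    dbeta = dout.sum(axis=0)
    dgamma = (dout * x_hat).sum(axis=0)
    dx_hat = dout * gamma
    # mu and var are functions of every sample in the batch, so they are
    # treated as variables: that is where the two subtracted terms come from.
    dx = (dx_hat
          - dx_hat.mean(axis=0)
          - x_hat * (dx_hat * x_hat).mean(axis=0)) / np.sqrt(var + eps)
    return dx, dgamma, dbeta
```

A numerical gradient check against `batchnorm_forward` is a quick way to confirm that the mean and variance terms have been handled correctly.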

However, during test (or validation) we ignore the mini-batch sample mean/variance and instead use a mean/variance estimated from the whole data.

This can be done in various ways, like I describe here
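Two of those ways are the exponential and the cumulative moving average of the per-batch statistics. The sketch below shows both update rules on a scalar batch mean; the function names, the `momentum=0.9` default and the synthetic data are assumptions made for illustration, and the project's own estimator may differ in detail.

```python
import numpy as np

def ema_update(running, batch_stat, momentum=0.9):
    """Exponential moving average: recent batches weigh more."""
    return momentum * running + (1.0 - momentum) * batch_stat

def cma_update(running, batch_stat, n_seen):
    """Cumulative moving average: every batch seen so far weighs the same.

    n_seen is the number of batches already folded into `running`.
    """
    return running + (batch_stat - running) / (n_seen + 1)

def track_batch_means(n_batches=200, batch_size=64, seed=0):
    """Feed synthetic batches (true mean 3.0) through both estimators."""
    rng = np.random.default_rng(seed)
    ema = cma = 0.0
    batch_means = []
    for k in range(n_batches):
        m = rng.normal(loc=3.0, scale=2.0, size=batch_size).mean()
        ema = ema_update(ema, m)
        cma = cma_update(cma, m, k)
        batch_means.append(m)
    return ema, cma, np.array(batch_means)
```

The cumulative average equals the plain average of all batch means seen so far, while the exponential average forgets old batches at a rate set by `momentum`. The running variance can be tracked with the same two rules.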

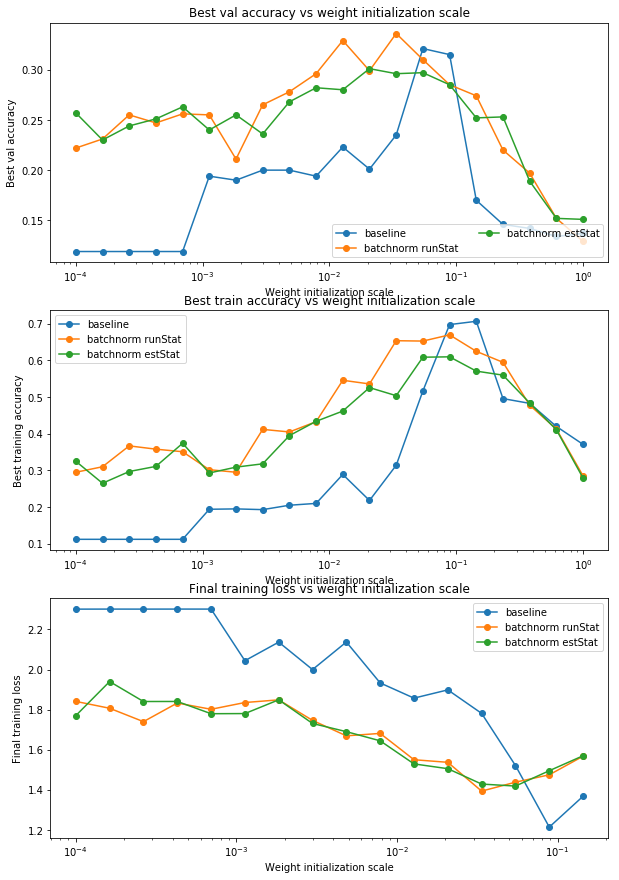

The biggest advantage of Batch Normalization is that it makes the network much less sensitive to weight initialization, as shown below. This saves a lot of the time people spend finding a good weight initialization scale.

As seen above, the baseline, which doesn’t use Batch Normalization, is very sensitive to weight initialization. There is a narrow window of initialization scales where accuracy is high; outside it, accuracy is bad (really bad).

However, with Batch Normalization there is a wide window where accuracy is reasonable, and it doesn’t fall off as sharply.
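One way to see why this happens is to push random data through a stack of untrained layers and watch the size of the activations. The sketch below is a toy illustration under stated assumptions (Gaussian inputs standing in for flattened 3x32x32 images, Gaussian weights, no learned scale or shift); `last_layer_std` is a hypothetical helper, not part of the project.

```python
import numpy as np

def last_layer_std(weight_scale, use_batchnorm, n_layers=5, width=100, seed=0):
    """Std of the activations after n_layers of [Affine - (BN) - ReLU]."""
    rng = np.random.default_rng(seed)
    h = rng.normal(size=(256, 3 * 32 * 32))  # stand-in for a batch of images
    for _ in range(n_layers):
        W = weight_scale * rng.normal(size=(h.shape[1], width))
        a = h @ W
        if use_batchnorm:
            # normalize each feature over the batch (gamma=1, beta=0)
            a = (a - a.mean(axis=0)) / np.sqrt(a.var(axis=0) + 1e-5)
        h = np.maximum(a, 0.0)
    return h.std()

if __name__ == "__main__":
    for scale in (1e-3, 1e-2, 1e-1, 1.0):
        print(scale, last_layer_std(scale, False), last_layer_std(scale, True))
```

Without normalization the activations shrink toward zero or blow up depending on `weight_scale`, whereas with normalization they stay at a similar size across the scales tried here.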

Source code for Batch Normalization in Python/Numpy can be found here

Description of project

This project is implemented entirely in Python, using the SciPy and Matplotlib libraries. All evaluations were done on my MacBook, so they run slower than they would on GPU-based machines.

Thus the networks are not very deep.

Fully Connected Network architecture I tried:

[Affine - [batch norm] - ReLU]x5 - Affine - softmax

(each hidden layer had 100 units)

The Convolutional Neural Network I tried was shallower than the Fully Connected network mentioned above, but achieves much higher accuracy!

conv - [spatial batch norm] - relu - 2x2 max pool - affine - [batch norm] - relu - affine - softmax

(Input dimension: 3x32x32; conv filters: 32x3x7x7; hidden layer units: 100)

(basically only 3 layers with tunable parameters, rather than the 6 in the Fully Connected network above)
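To make the size comparison concrete, the weight and bias counts of both architectures can be worked out from the dimensions given above. This sketch assumes stride 1 and padding 3 for the 7x7 convolution (so the 32x32 spatial size is preserved before pooling), which is not stated in the text, and it ignores the small per-feature Batch Normalization parameters.

```python
def conv_net_params(C=3, H=32, W=32, F=32, HH=7, WW=7,
                    pad=3, stride=1, hidden=100, classes=10):
    """Weights + biases of conv - pool - affine - affine (BN params ignored)."""
    H_conv = (H + 2 * pad - HH) // stride + 1
    W_conv = (W + 2 * pad - WW) // stride + 1
    H_pool, W_pool = H_conv // 2, W_conv // 2      # 2x2 max pool
    return {
        "conv": F * C * HH * WW + F,
        "affine1": F * H_pool * W_pool * hidden + hidden,
        "affine2": hidden * classes + classes,
    }

def fc_net_params(input_dim=3 * 32 * 32, hidden=100, n_hidden=5, classes=10):
    """Weights + biases of [Affine - ReLU] x n_hidden - Affine."""
    dims = [input_dim] + [hidden] * n_hidden + [classes]
    return [d_in * d_out + d_out for d_in, d_out in zip(dims[:-1], dims[1:])]

if __name__ == "__main__":
    print(conv_net_params(), sum(conv_net_params().values()))
    print(fc_net_params(), sum(fc_net_params()))
```

Under these assumptions almost all of the conv net's parameters sit in the first affine layer, which sees the flattened 32x16x16 pooled feature map.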

I will implement all these in TensorFlow soon!

Since the Fully Connected results are not very interesting, I won’t show them here, but the interested reader can view them here

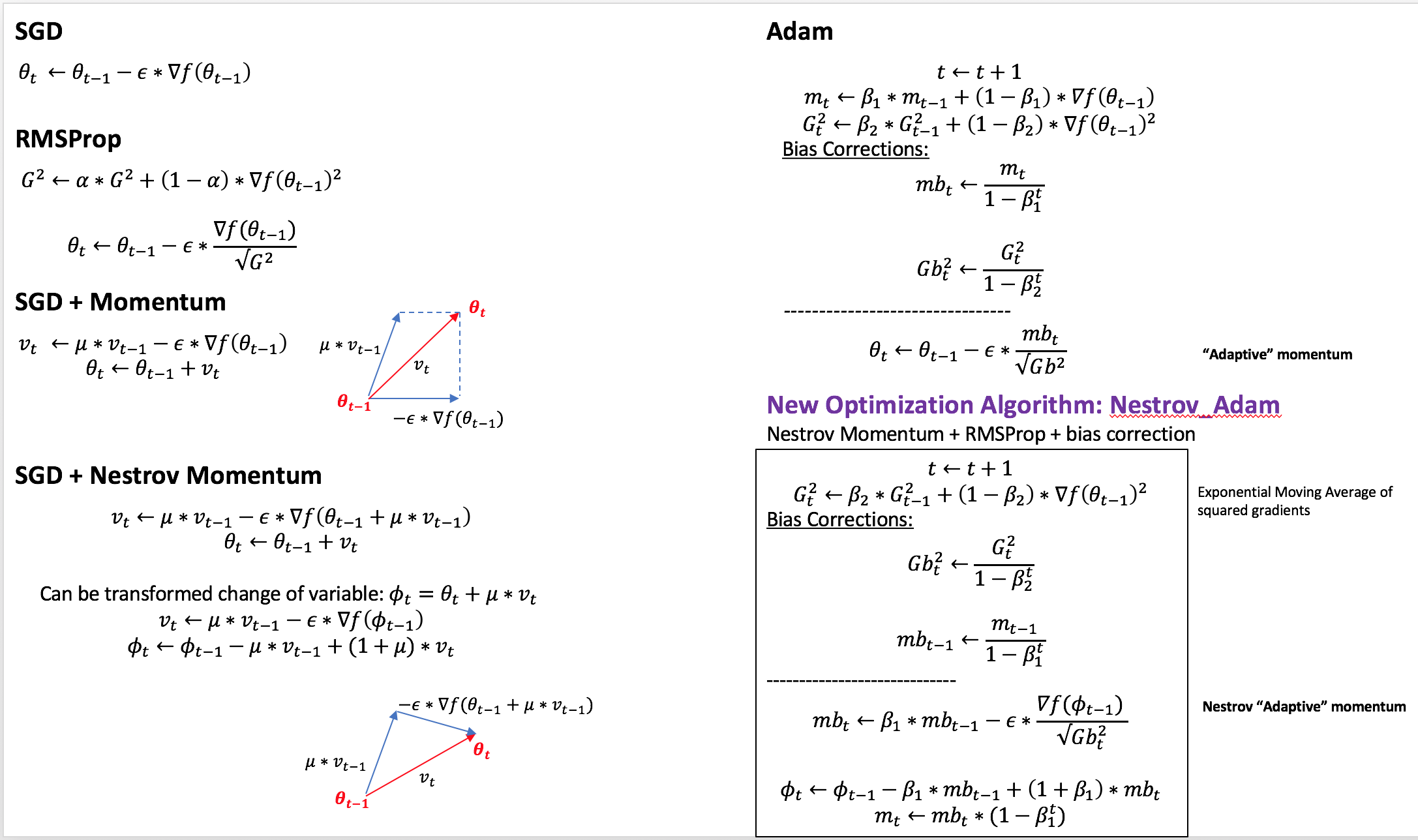

I am excited to share a new optimization algorithm I tried, which combines Nesterov Momentum, RMSProp and the bias corrections proposed for the Adam algorithm.

Here is a list of well-known optimization algorithms that are used quite often in the Neural Network world:

- SGD

- SGD with Momentum

- SGD with Nesterov Momentum

- RMSProp

- Adaptive Moment Estimation (Adam)

This link describes all of the above in great detail.
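For quick reference, the five update rules can be sketched in NumPy as below. These are the commonly used textbook forms; the function signatures, the `state` dictionary and the default hyperparameters are illustrative assumptions rather than the project's actual code.

```python
import numpy as np

def sgd(w, dw, state, lr=1e-2):
    return w - lr * dw

def sgd_momentum(w, dw, state, lr=1e-2, mu=0.9):
    v = mu * state.get("v", np.zeros_like(w)) - lr * dw
    state["v"] = v
    return w + v

def sgd_nesterov(w, dw, state, lr=1e-2, mu=0.9):
    v_prev = state.get("v", np.zeros_like(w))
    v = mu * v_prev - lr * dw
    state["v"] = v
    # look-ahead form that only needs the gradient at the current w
    return w - mu * v_prev + (1 + mu) * v

def rmsprop(w, dw, state, lr=1e-2, decay=0.99, eps=1e-8):
    cache = decay * state.get("cache", np.zeros_like(w)) + (1 - decay) * dw**2
    state["cache"] = cache
    return w - lr * dw / (np.sqrt(cache) + eps)

def adam(w, dw, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    t = state.get("t", 0) + 1
    m = beta1 * state.get("m", np.zeros_like(w)) + (1 - beta1) * dw
    v = beta2 * state.get("v", np.zeros_like(w)) + (1 - beta2) * dw**2
    state.update(t=t, m=m, v=v)
    m_hat = m / (1 - beta1**t)   # bias correction for the zero-initialized m
    v_hat = v / (1 - beta2**t)   # bias correction for the zero-initialized v
    return w - lr * m_hat / (np.sqrt(v_hat) + eps)

def minimize_quadratic(update, steps=200, **kwargs):
    """Run an update rule on f(w) = 0.5 * ||w||^2 and return the final loss."""
    w = np.array([1.0, -2.0])
    state = {}
    for _ in range(steps):
        w = update(w, w, state, **kwargs)  # the gradient of f at w is w itself
    return 0.5 * np.sum(w**2)
```

`minimize_quadratic(adam)` and its siblings give a cheap sanity check that each rule actually reduces a simple loss before it is used on a network.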

In my experiments, Nesterov Momentum almost always performs better than simple Momentum.

So, I tried a combination of Nesterov Momentum with RMSProp.

The following picture describes the mathematics behind the various algorithms, and hopefully you can find in it a justification for what I am proposing.

All of the implemented algorithms can be found here
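As a rough illustration of how such a combination can look, here is one plausible way to put a Nesterov-style look-ahead on top of Adam's bias-corrected moments. This is a sketch under my own assumptions (the name `nesterov_adam_sketch`, the blending formula and the defaults are all hypothetical) and is not necessarily the exact update used in the experiments.

```python
import numpy as np

def nesterov_adam_sketch(w, dw, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam-style moments plus a Nesterov-style look-ahead (illustrative only)."""
    t = state.get("t", 0) + 1
    m = beta1 * state.get("m", np.zeros_like(w)) + (1 - beta1) * dw   # momentum
    v = beta2 * state.get("v", np.zeros_like(w)) + (1 - beta2) * dw**2  # RMSProp cache
    state.update(t=t, m=m, v=v)
    m_hat = m / (1 - beta1**t)   # bias corrections, as in Adam
    v_hat = v / (1 - beta2**t)
    # look-ahead: blend the corrected momentum with the current gradient
    m_bar = beta1 * m_hat + (1 - beta1) * dw / (1 - beta1**t)
    return w - lr * m_bar / (np.sqrt(v_hat) + eps)

if __name__ == "__main__":
    w, state = np.array([1.0, -2.0]), {}
    for _ in range(500):
        w = nesterov_adam_sketch(w, w, state, lr=1e-2)  # gradient of 0.5*||w||^2
    print(w)
```

The only difference from plain Adam in this sketch is the `m_bar` line, which gives the current gradient extra weight in the step direction.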

Results

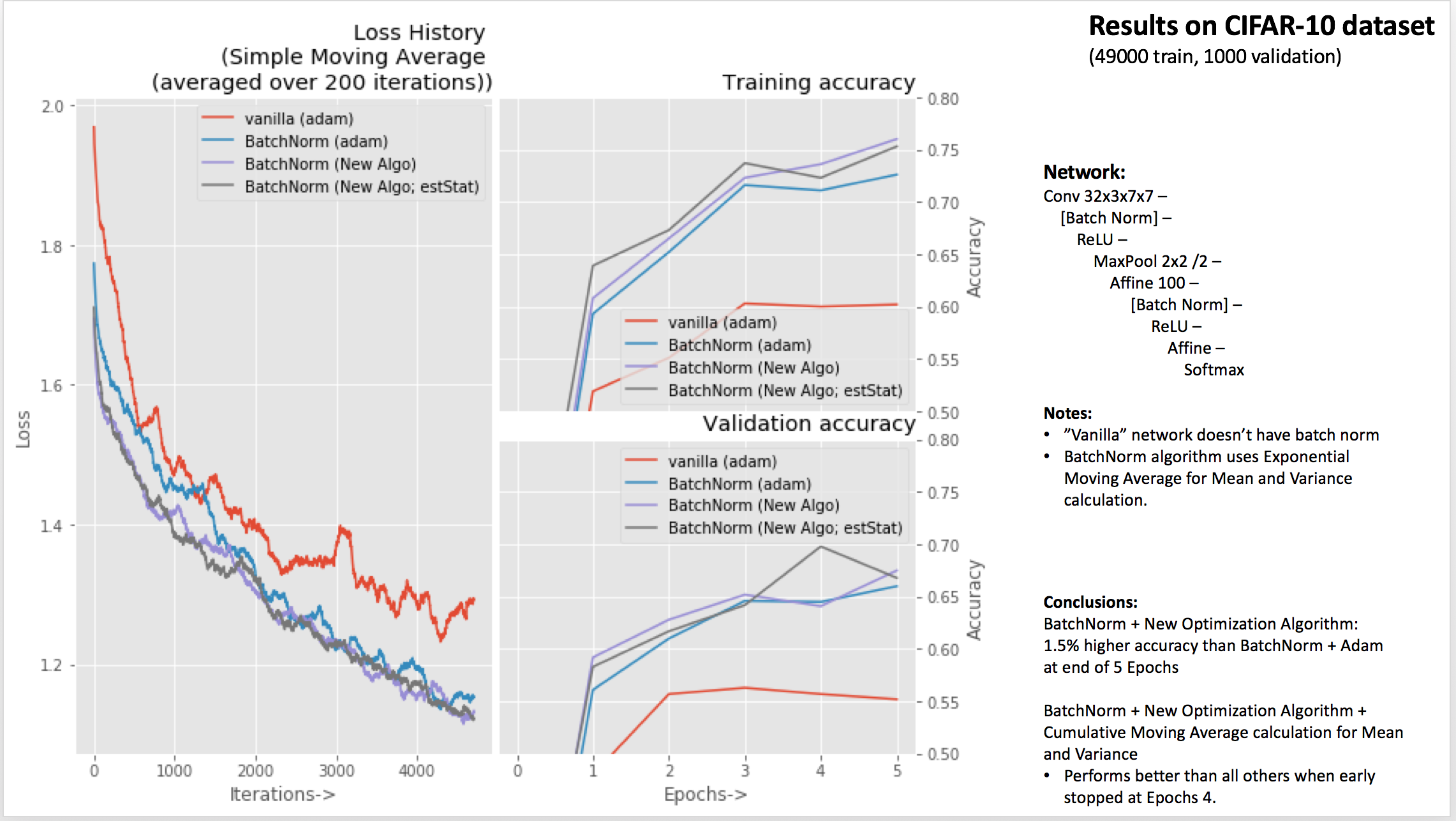

Now let's look at the results of my experiments with Batch Normalization and the new algorithm (Nestrov_Adam) I propose.

Labels:

- “vanilla (adam)”: the basic Convolutional Network (described above) without any Batch Normalization layers.

- “BatchNorm (adam)”: the Convolutional Network (described above) with Batch Normalization layers.

- “BatchNorm (New Algo)”: the Convolutional Network (described above) with Batch Normalization layers, trained with the Nesterov Adam optimization algorithm (described above) and using exponential moving averages for the mean and variance.

- “BatchNorm (New Algo; estStat)”: the Convolutional Network (described above) with Batch Normalization layers, trained with the Nesterov Adam optimization algorithm (described above) and using cumulative moving averages for the mean and variance.

Conclusions

As can be seen in the results above, just placing Batch Normalization layers in the Convolutional Neural Network achieves significant accuracy gains (>9%) on both the training and validation sets.

Further, the Nesterov Adam algorithm achieves good gains on top of Batch Normalization. On average, in these experiments it achieves lower loss across all iterations and higher training and validation accuracies than most of the first-order optimization algorithms commonly used in the deep learning community.