Unsupervised Learning - Expectation Maximization

Expectation Maximization is a popular generative algorithm, which elegantly handles Multi-Variate Distributions and tries to find close approximations to underlying data-generating distribution.

For fun, I derived the Multi-Variate Expectation Maximization algorithm, using Mixture of Gaussians. Interested readers can find it here

In following I will consider a particular family of exponential distributions, the Normal Distribution, which for 1-dimensional data x:

(subscripts are for N data points and K Normal Distributions which try to approximate data distribution.

For multidimensional data (s.t. ), Normal Distribution is,

where is covariance matrix for (or )

For following discussion, we will mainly consider 1-dimensional data x (start from something simple and grow complex!)

EM Algorithm looks like this:

Repeat until convergence:

-

find

(Can be interpretted as probability of (or ), given data point is observed.) -

find

(Can be interpreted as Expected number of data points which belong to (or ) -

find

(updated prior of the (or )) -

find

(weighted mean of (or )) -

find …[1]

Now lets see this algorithm fitting 5-Gaussians (K=5) on a gray-scale image shown below. Size of this image=300KB

After running EM algorithm on this image, trying to fit 5-Gaussians, we get following compressed image=100KB

(Note that actual storage size on disk < 10KB, as this image is actually stored as:- an array of 8 bit integers, each location specifying which cluster it belongs to (that is, most likely Gaussian for that data point).

- And only 15 64-bit floating point numbers, with 5 for , 5 for , 5 for .)

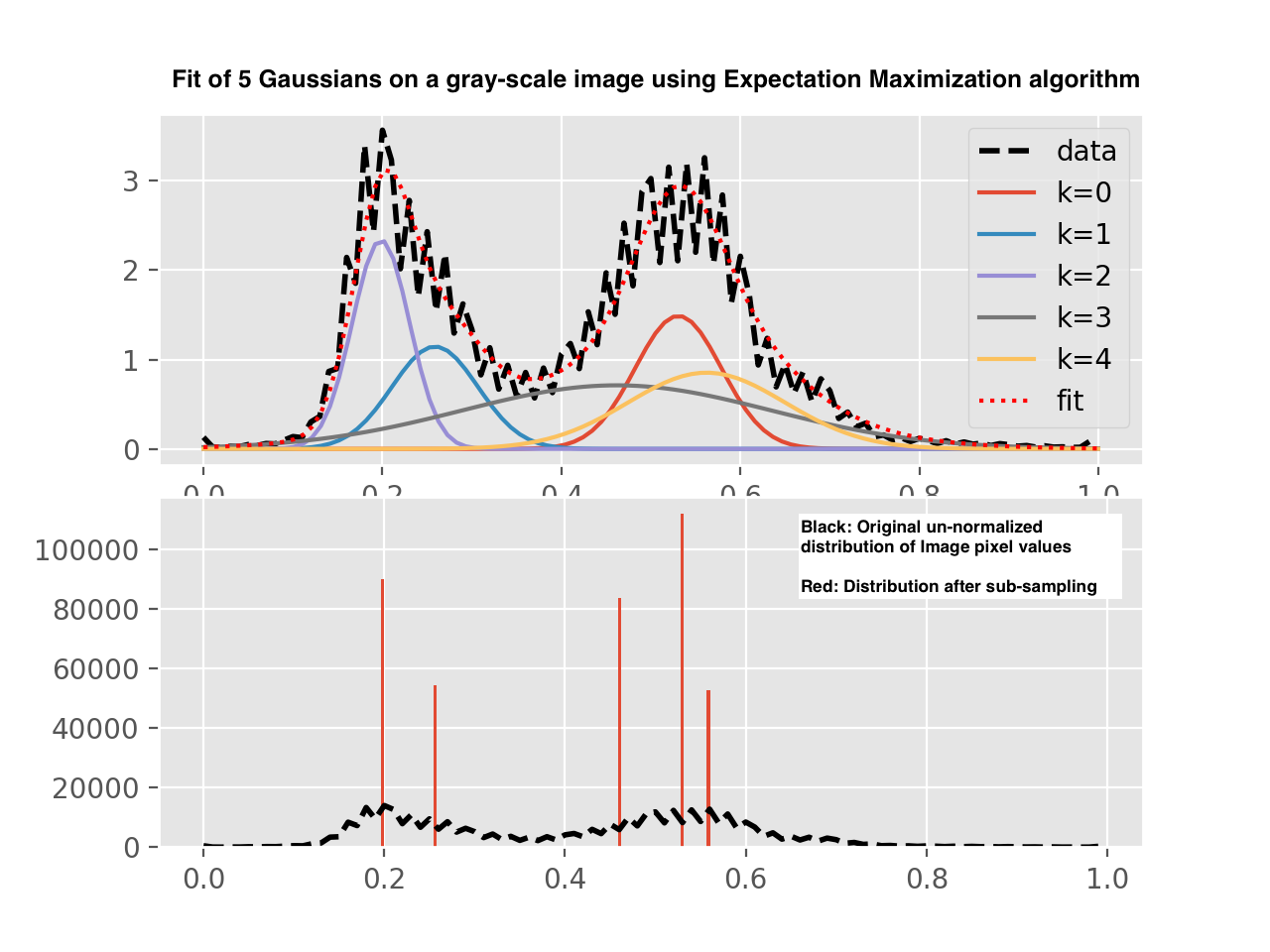

Following is the statistical analysis of what original image distribution was, how 5-Gaussians were fitted and distribution after sub-sampling to prove that only few unique values are sufficient to describe the compressed image.

As seen above, 5-Gaussians fit (Red dotted line in top half) matches closely to actual data distribution. But is of-course a smoothed approximation.

Bottom-half in picture above compares histogram of sub-sampled image (each pixel value replace with most likely Gaussian), and histogram of original image.

[1]: it is important to interpret

Let us say (and of course also ) So,